Jun Ye’s latest research applies GenAI to neural interface systems to improve brain–computer interface accuracy and to support storytelling for child literacy.

Neural interface systems still struggle to decode weak EEG signals quickly and accurately. Jun Ye’s latest research applies GenAI and advanced learning models to these problems, reporting high decoding accuracy while also applying generative AI to education.

Published in the International Journal of Big Data Intelligent Technology, Ye’s framework integrates attention-supervised learning with L2 regularization and reports 96.87% accuracy in EEG-to-text conversion. The system uses real-time attention tracking to filter out poor-quality brain data and compresses 31 signal classes into five optimized categories, which yields cleaner, more efficient neural decoding for brain–computer interfaces (BCIs). The model’s MLSTM architecture fuses mapping modules with memory control gates to limit overfitting and maintain accuracy across users.
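To make the three ideas in this paragraph concrete, here is a minimal Python sketch of attention-based filtering, class compression, and an L2 penalty. It is an illustration under stated assumptions, not the published method: the `Epoch` type, the 0.6 attention threshold, the contiguous binning of 31 classes into five groups, and the `lam` value are all hypothetical and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass
class Epoch:
    """One EEG segment with a hypothetical attention score in [0, 1]."""
    signal: list[float]
    attention: float
    label: int  # fine-grained class index, 0..30


# Assumed cut-off; the paper's actual criterion is not given in this article.
ATTENTION_THRESHOLD = 0.6


def filter_epochs(epochs: list[Epoch], threshold: float = ATTENTION_THRESHOLD) -> list[Epoch]:
    """Keep only epochs recorded while the attention score was high enough."""
    return [e for e in epochs if e.attention >= threshold]


def coarse_group(label: int, n_classes: int = 31, n_groups: int = 5) -> int:
    """Map a fine-grained class to one of n_groups by contiguous binning.

    This binning is a placeholder; the real grouping rule is not described here.
    """
    if not 0 <= label < n_classes:
        raise ValueError(f"label {label} outside 0..{n_classes - 1}")
    return label * n_groups // n_classes


def l2_regularized_loss(data_loss: float, weights: list[float], lam: float = 1e-3) -> float:
    """Add an L2 penalty (lam times the sum of squared weights) to a data loss."""
    return data_loss + lam * sum(w * w for w in weights)


epochs = [
    Epoch(signal=[0.1, 0.2], attention=0.9, label=0),
    Epoch(signal=[0.3, 0.1], attention=0.2, label=17),  # dropped: low attention
    Epoch(signal=[0.0, 0.4], attention=0.7, label=30),
]
kept = filter_epochs(epochs)
groups = [coarse_group(e.label) for e in kept]
```

In this toy run, the low-attention epoch is discarded before training, and the remaining labels fall into the first and last of the five coarse groups. The L2 term penalizes large weights, which is the standard way such regularization discourages overfitting.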

Beyond technical advancement, Ye’s work demonstrates real-world impact in assistive technology. His attention-driven BCI translates imagined handwriting into text, allowing individuals with motor impairments, such as those caused by stroke or ALS, to communicate effectively. The generative model’s precision enables responsive, real-time text generation that adapts to user concentration levels.

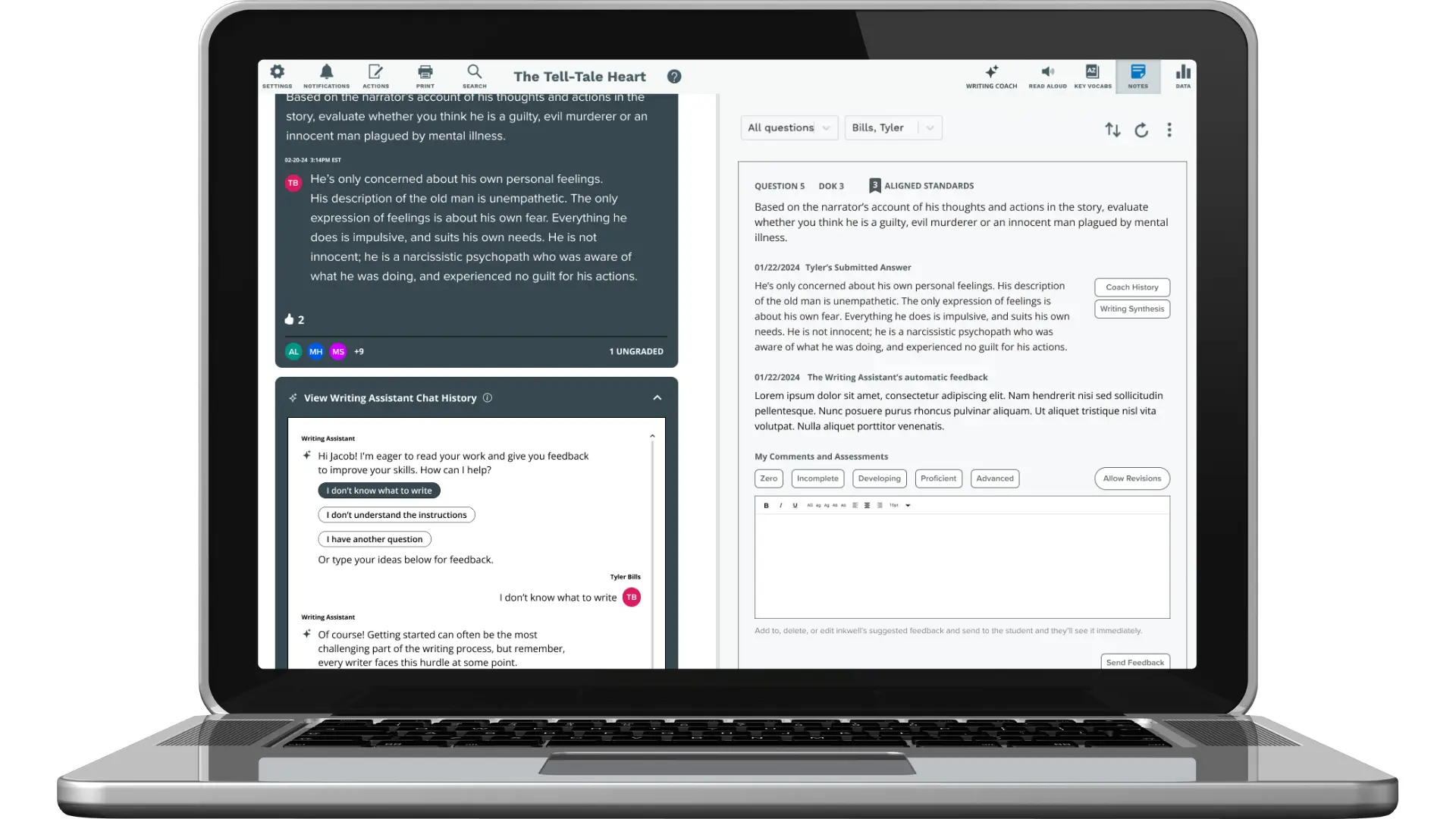

Extending this innovation to education, Ye developed Sound Pages, a GenAI-powered app that converts children’s voice prompts into illustrated, narrated storybooks. The system uses a multi-agent generative pipeline, with separate AI roles for writing, illustration, and audio, to create personalized, safe, and interactive stories. Children can tap words for pronunciation, learn vocabulary, and engage through comprehension cues. The app highlights how GenAI can merge creativity, empathy, and technical precision to enhance learning.
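The multi-agent idea can be pictured as a chain of role-specific steps. The Python sketch below is a hypothetical illustration only: the agent functions, the `StoryPage` type, and the word blocklist are stand-ins invented for this example, and nothing here reflects how Sound Pages is actually built. In a real system each stub would presumably call a generative model.

```python
from dataclasses import dataclass


@dataclass
class StoryPage:
    text: str
    illustration_prompt: str
    narration_script: str


# Hypothetical blocklist standing in for a real content-safety check.
BLOCKED_WORDS = {"scary", "violent"}


def is_safe(text: str) -> bool:
    """Return True if no blocked word appears in the text."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return words.isdisjoint(BLOCKED_WORDS)


def writer_agent(prompt: str) -> list[str]:
    """Stub writer: turns a child's prompt into a few story sentences."""
    topic = prompt.strip().rstrip(".!?")
    return [
        f"Once upon a time, there was {topic}.",
        f"Everyone loved {topic}.",
        "The end.",
    ]


def illustrator_agent(sentence: str) -> str:
    """Stub illustrator: produces an image prompt for one sentence."""
    return f"Picture-book illustration of: {sentence}"


def narrator_agent(sentence: str) -> str:
    """Stub narrator: produces a narration script for one sentence."""
    return f"[calm voice] {sentence}"


def build_storybook(prompt: str) -> list[StoryPage]:
    """Run the writer, then the illustrator and narrator on each sentence."""
    if not is_safe(prompt):
        raise ValueError("prompt rejected by safety check")
    return [
        StoryPage(s, illustrator_agent(s), narrator_agent(s))
        for s in writer_agent(prompt)
    ]


pages = build_storybook("a brave little turtle")
```

Keeping each role behind its own function means the writer, illustrator, and narrator can be swapped or tested independently, which is one common reason to split a generative pipeline into agents.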

Jun Ye’s combined GenAI and neural-interface research marks a leap in both human–machine communication and AI-assisted literacy. It bridges neuroscience, language modeling, and creativity, showing how generative systems can decode thought and imagination alike.